Connect dbt models to Mage Pro blocks (Step-by-step tutorial)

September 2, 2025

TLDR

This article details how to integrate your dbt models with Mage Pro pipelines to load data into BigQuery. You'll learn how to create a batch pipeline, connect a Mage Pro data loader to a dbt block, configure the necessary YAML files, and execute your dbt models to create both seed tables and views in your data warehouse, all within the Mage Pro environment. This process streamlines your data transformations and ensures a smooth migration of your dbt workflows.

Table of contents

Introduction

Creating your Mage Pro pipeline and data loader

Integrating your dbt model

Configuring your source connections in Mage Pro

Running your pipeline and verifying data in BigQuery

Conclusion

Introduction

In Part 1 of our migration series, we demonstrated how moving from dbt Cloud to Mage Pro could be accomplished in under 10 minutes. Now that your dbt project is successfully migrated, it's time to showcase the real power of Mage Pro: orchestrating your dbt models within data pipelines.

This article will show you how to connect your newly migrated dbt models to Mage Pro pipelines, creating a data workflow that fetches data, transforms it through your dbt models, and loads the results into BigQuery. If you haven't completed Part 1 yet, check out our dbt migration blog first to get your dbt project migrated to Mage Pro.

Creating your Mage Pro pipeline and data loader

Now that your dbt project is migrated, let's create your first integrated pipeline. Here's how to get started:

Navigate to pipelines: From the Mage Pro homepage, hover over the left navigation menu and select "Pipelines."

Create a new pipeline: Click the green "New pipeline" button. Select the Batch pipeline option and give your pipeline a memorable name like "My dbt Integration Pipeline."

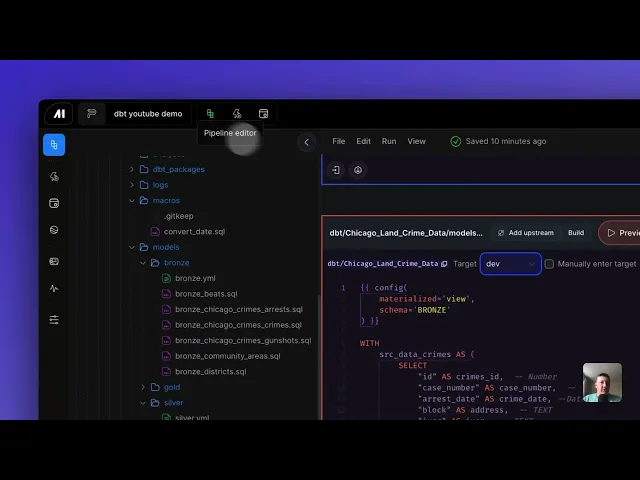

Add a data loader block: Once in the pipeline editor, click the "Blocks" button, hover over the "Loader" option, and select the appropriate loader type for your data source (API, Database, File, etc.).

For this integration, you can use any data source that fits your project. Your data loader block will handle:

Fetching data from your chosen source (APIs, databases, files, etc.)

Performing initial data cleaning and validation

Running tests to ensure data quality

Utilizing secrets for secure access to protected resources

Run the data loader: Execute the data loader block to verify it's successfully extracting your data. You should see a preview of your extracted data in the output.

Data loader block example:

Integrating your dbt model

With your data loader successfully pulling data, it's time to connect your migrated dbt models.

Add a dbt block: Click the "Blocks" button again, scroll down to "dbt," and select "model or snapshot from file."

Select your dbt model: Choose the dbt model file you want to integrate (for example,

bronze_customer_data.sqlor any model from your migrated project) and click "Add to pipelines."Connect the blocks: Visually connect your Mage Pro data loader block to your newly added dbt block by dragging a connector between them.

This connection is crucial - it's what allows your dbt models to reference the data from your Mage Pro blocks.

Configuring your source connections in Mage Pro

Once you’ve added your dbt block and connected it to a Mage Pro data loader or transformer block you essentially generate a source seed. The connection will automatically generate a mage_sources.yml file. This file acts as the bridge between your Mage Pro blocks and your dbt models.

After connecting the blocks you should take the following steps:

Locate mage_sources.yaml: After connecting the blocks, refresh your file editor page and navigate to your dbt folder. You should find the

mage_sources.yamlfile.Update source connections: You'll need to replace the source tables with Mage specific sources:

The first source connection should be the name of your dbt source (e.g.,

mage_your_project_name)The second source connection will be the full name of your Mage Pro data loader block (e.g.,

Your_Pipeline_Name_Your_Data_Loader_Block_Name)Important: Use single quotes around these names in the YAML file

This configuration ensures your dbt models can correctly reference the data provided by your Mage Pro data loader.

Sample mage_sources.yml file:

Running your pipeline and verifying data in your warehouse

With all configurations in place, let's execute your integrated pipeline and see the results in your data warehouse.

Run the pipeline: In the pipeline editor, click the "Preview" button to initiate the pipeline run. The initial run might take around 20 seconds as Mage Pro orchestrates both the data loading and dbt transformation.

Verify seed table in your warehouse: After the pipeline completes, check your data warehouse environment. You should find a seed table created in your specified dataset or schema. The table name will follow the pattern mage.<pipeline_name>_<data_loader_block_name>.

Run dbt models for views/tables: To create views or tables from your dbt models, open the Mage terminal:

Execute:

dbt run --select <your_model_name>(replace with your specific model name)This command will successfully create your dbt transformations in your warehouse

Verify transformations in your warehouse: Return to your data warehouse interface. You should now see new datasets/schemas and views/tables with your transformed data. Run a quick query to verify the data is processed correctly.

Conclusion

Successfully connecting your dbt models to Mage Pro pipelines and loading data into BigQuery demonstrates a robust approach to data engineering. This process simplifies the migration of dbt projects, enables efficient data extraction and transformation, and ensures accurate data delivery to your data warehouse. Mage Pro's visual pipeline development, built-in connectivity, and orchestration capabilities make it a powerful platform for managing complex data workflows.

Want to consolidate your data stack under one tool? Schedule a free demo with Mage to get started today!