Guest post

Getting started with Apache Flink: A guide to stream processing

June 1, 2023

TLDR

This guide introduces Apache Flink and stream processing, explaining how to set up a Flink environment and create simple applications. Key Flink concepts are covered along with basic troubleshooting and monitoring techniques. It ends with resources for further learning and community support.

Outline

Introduction to Apache Flink and stream processing

Setting up a Flink development environment

A simple Flink application walkthrough: data ingestion, processing, and output

Understanding Flink’s key concepts (DataStream API, windows, transformations, sinks, sources)

Basic troubleshooting and monitoring for Flink applications

Conclusion

Introduction to Apache Flink and stream processing

Apache Flink is an open-source, high-performance framework designed for large-scale data processing, excelling at real-time stream processing. It features low-latency, stateful computations, enabling users to process live data and generate insights on the fly. Flink is fault-tolerant and scalable, and it provides powerful data processing capabilities that cater to various use cases.

Stream processing is a computing paradigm in which data is processed as soon as it arrives or is produced. Unlike traditional batch processing systems that deal with data at rest, stream processing handles data in motion. This paradigm is especially useful in scenarios where insights need to be derived immediately, such as real-time analytics, fraud detection, and event-driven systems. Flink's high throughput, low latency, and exactly-once processing semantics make it an excellent choice for such applications.

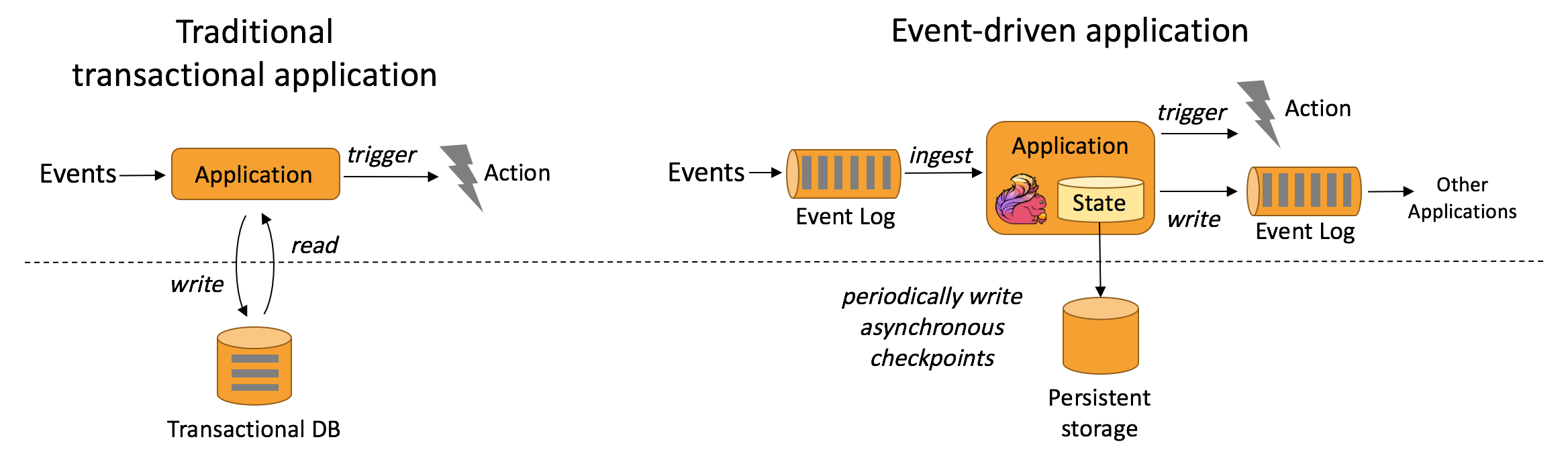

Source: Flink

Setting up a Flink development environment

Setting up a development environment for Apache Flink is a straightforward process. Here's a brief step-by-step guide:

Install Java: Flink requires Java 8 or 11, so you need to have one of these versions installed on your machine. You can download Java from the Oracle website or use OpenJDK.

Download and Install Apache Flink: You can download the latest binary of Apache Flink from the official Flink website. Once downloaded, extract the files to a location of your choice.

Start a Local Flink Cluster: Navigate to the Flink directory in a terminal, then go to the bin folder. Start a local Flink cluster using the command ./start-cluster.sh (for Unix/Linux/macOS) or start-cluster.bat (for Windows).

Check Flink Dashboard: Open a web browser and visit http://localhost:8081. You should see the Flink Dashboard, indicating that your local Flink cluster is running successfully.

Set up an Integrated Development Environment (IDE): For writing and testing your Flink programs, you can use an IDE such as IntelliJ IDEA or Eclipse. Make sure to also install the Flink plugin if your IDE has one.

Create a Flink Project: You can create a new Flink project (See Apache Flink Playground) using a build tool like Maven or Gradle. Flink provides quickstart Maven archetypes to set up a new project easily.

Once you've set up your Flink development environment, you're ready to start developing Flink applications. Remember that while this guide describes a basic local setup, a production Flink setup would involve a distributed cluster and possibly integration with other big data tools.
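The dashboard check from the setup steps can also be done from code. The sketch below is a hypothetical helper, not part of Flink: it assumes the cluster runs at the default local address used above and that its REST interface answers on the /overview path. The class and method names are made up for illustration, and it uses only the Java standard library so it works on Java 8 and 11.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical helper (not part of Flink): probes a local cluster's REST endpoint.
class ClusterCheck {

    // Builds the URL of the REST "overview" resource from the dashboard base address.
    static String overviewUrl(String baseUrl) {
        String trimmed = baseUrl.endsWith("/")
                ? baseUrl.substring(0, baseUrl.length() - 1)
                : baseUrl;
        return trimmed + "/overview";
    }

    // Returns true if the endpoint answers with HTTP 200 within the timeout.
    static boolean isClusterUp(String baseUrl, int timeoutMillis) {
        try {
            HttpURLConnection conn =
                    (HttpURLConnection) new URL(overviewUrl(baseUrl)).openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            int status = conn.getResponseCode();
            conn.disconnect();
            return status == 200;
        } catch (IOException e) {
            // Malformed URL, connection refused, timeout: treat the cluster as not reachable.
            return false;
        }
    }

    public static void main(String[] args) {
        String base = "http://localhost:8081"; // address from the setup steps above
        System.out.println(isClusterUp(base, 2000)
                ? "Flink cluster is reachable at " + base
                : "No Flink cluster answering at " + base);
    }
}
```

If the check reports that nothing is answering, the usual first step is to confirm that the start-cluster script finished without errors and to look at the files in the log directory.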

A simple Flink application walkthrough: data ingestion, processing, and output

A simple Apache Flink application can be designed to consume a data stream, process it, and then output the results. Let's walk through a basic example:

Data Ingestion (Sources): Flink applications begin with one or more data sources. A source could be a file on a filesystem, a Kafka topic, or any other data stream.

Data Processing (Transformations): Once the data is ingested, the next step is to process or transform it. This could involve filtering data, aggregating it, or applying any computation.

Data Output (Sinks): The final step in a Flink application is to write the processed data to an output destination, known as a sink. This could be a file, a database, or a Kafka topic.

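Job Execution: After defining the sources, transformations, and sinks, the Flink job needs to be executed. Defining the pipeline only builds a dataflow graph; nothing runs until execute() is called on the execution environment.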

A typical example of this pattern reads data from a Kafka topic, performs some basic word count processing on the stream, and then writes the results into a Cassandra table, using Java and Flink's DataStream API.
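The Kafka-to-Cassandra job described above needs connector dependencies and running services, so it is not reproduced here. As a dependency-free sketch of the same source, transformation, and sink flow, the plain-Java class below counts words from a fixed list of lines. It does not use the Flink API; the class name, the sample lines, and the "word=count" record format are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of source -> transformation -> sink; this is not Flink API.
class WordCountSketch {

    // "Source": a fixed list standing in for lines arriving from, say, a Kafka topic.
    static List<String> source() {
        return Arrays.asList("to be or not to be", "that is the question");
    }

    // "Transformation": split lines into words, group by word, keep a running count.
    // Like a streaming job, it emits one updated "word=count" record per input word.
    static List<String> transform(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        List<String> updates = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.toLowerCase().split("\\s+")) {
                if (word.isEmpty()) {
                    continue; // skip the empty token produced by leading whitespace
                }
                int count = counts.merge(word, 1, Integer::sum);
                updates.add(word + "=" + count);
            }
        }
        return updates;
    }

    // "Sink": print each record; a real job might write to a database instead.
    static void sink(List<String> records) {
        for (String record : records) {
            System.out.println(record);
        }
    }

    public static void main(String[] args) {
        sink(transform(source()));
    }
}
```

In a DataStream program the same three stages would roughly correspond to a source, a flatMap followed by keyBy and sum, and a sink, with the job started by calling execute() on the environment. Note that the running count is emitted on every update, so the word "to" appears first as to=1 and later as to=2, which is how an unbounded stream reports results before it has seen all the data.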

Understanding Flink’s key concepts

DataStream API: Flink's main tool for creating stream processing applications, providing operations to transform data streams.

Windows: Split an unbounded stream into finite groups of events for computation, based on count, time, or sessions.

Transformations: Operations applied to data streams to produce new streams, including map, filter, flatMap, keyBy, reduce, aggregate, and window.

Sinks: The endpoints of Flink applications where processed data ends up, such as a file, database, or message queue.

Sources: The starting points of Flink applications that ingest data from external systems or generate data internally, such as a file or Kafka topic.

Event Time vs. Processing Time: Flink supports different notions of time in stream processing. Event time is the time when an event occurred, while processing time is the time when the event is processed by the system. Flink excels at event time processing, which is crucial for correct results in many scenarios.

CEP (Complex Event Processing): Flink supports CEP, which is the ability to detect patterns and complex conditions across multiple streams of events.

Table API & SQL: Flink offers a Table API and SQL interface for batch and stream processing. This allows users to write complex data processing applications using a SQL-like expression language.

Stateful Functions (StateFun): StateFun is a framework by Apache Flink designed to build distributed, stateful applications. It provides a way to define, manage, and interact with a dynamically evolving distributed state of functions.

Operator Chain and Task: Flink operators (transformations) can be chained together into a task for efficient execution. This reduces the overhead of thread-to-thread handover and buffering.

Savepoints: Savepoints are similar to checkpoints, but they are triggered manually and provide a way to version and manage the state of Flink applications. They are used for planned maintenance and application upgrades.

State Management: Flink provides fault-tolerant state management, meaning it can keep track of the state of an application (e.g., the last processed event) and recover it if a failure occurs.

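Watermarks: These are a mechanism to denote progress in event time. A watermark with timestamp t declares that no more events with a timestamp of t or earlier are expected. Flink uses watermarks to decide when a window is complete and which events count as late, so the system can handle out-of-order events and still provide accurate results.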

Checkpoints: A checkpoint is a snapshot of the state of a Flink application at a particular point in time, taken automatically at a configured interval once checkpointing is enabled. Checkpoints provide fault tolerance by allowing an application to revert to a previous state in case of failures.
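Three of the concepts above (windows, event time, and watermarks) interact closely, so a small model may help. The sketch below is plain Java, not Flink API: it assigns event timestamps to tumbling windows, tracks a watermark as the highest timestamp seen minus a fixed out-of-orderness bound minus one millisecond (the same idea as a bounded-out-of-orderness strategy), and marks an event as late when the watermark has already passed the end of its window. The class name, field names, and the zero allowed lateness are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of event-time tumbling windows and watermarks; this is not Flink API.
class EventTimeSketch {

    // Start of the tumbling window of length sizeMs that contains timestampMs.
    // Assumes non-negative timestamps and windows aligned to time 0.
    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    private final long sizeMs;
    private final long maxOutOfOrdernessMs;
    private long maxSeenTimestamp = Long.MIN_VALUE;
    final List<Long> accepted = new ArrayList<>();
    final List<Long> late = new ArrayList<>();

    EventTimeSketch(long sizeMs, long maxOutOfOrdernessMs) {
        this.sizeMs = sizeMs;
        this.maxOutOfOrdernessMs = maxOutOfOrdernessMs;
    }

    // Watermark: no events with a timestamp at or below this value are expected any more.
    long currentWatermark() {
        if (maxSeenTimestamp == Long.MIN_VALUE) {
            return Long.MIN_VALUE; // nothing seen yet
        }
        return maxSeenTimestamp - maxOutOfOrdernessMs - 1;
    }

    // Handles one event: it is late if the watermark already covers its whole window.
    void onEvent(long timestampMs) {
        long lastTimestampInWindow = windowStart(timestampMs, sizeMs) + sizeMs - 1;
        if (lastTimestampInWindow <= currentWatermark()) {
            late.add(timestampMs);
        } else {
            accepted.add(timestampMs);
        }
        maxSeenTimestamp = Math.max(maxSeenTimestamp, timestampMs);
    }

    public static void main(String[] args) {
        // 10-second windows, events may arrive up to 2 seconds out of order.
        EventTimeSketch sketch = new EventTimeSketch(10_000L, 2_000L);
        for (long ts : new long[] {1_000L, 11_000L, 9_000L, 12_000L, 9_500L}) {
            sketch.onEvent(ts);
        }
        System.out.println("accepted=" + sketch.accepted + " late=" + sketch.late);
    }
}
```

With these inputs the event at 9,000 ms arrives after the one at 11,000 ms but is still accepted, because the watermark at that moment is 8,999 and its window (0 to 9,999) is still open. After the event at 12,000 ms the watermark reaches 9,999, so the event at 9,500 ms is classified as late. Processing-time windows would not make this distinction, since they group events by arrival time only.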

Basic troubleshooting and monitoring in Flink

Troubleshooting and monitoring are essential aspects of running Apache Flink applications. Here are some key concepts and tools:

Flink Dashboard: This web-based user interface provides an overview of your running applications, including statistics on throughput, latency, and CPU/memory usage. It also allows you to drill down into individual tasks to identify bottlenecks or issues.

Logging: Flink uses SLF4J for logging, with Log4j 2 as the default backend. Logs can be crucial for diagnosing problems or understanding the behavior of your applications. Log files can be found in the log directory of your Flink installation.

Metrics: Flink exposes a wide array of system and job-specific metrics, such as the number of elements processed, bytes read/written, task/operator/JobManager/TaskManager statistics, and more. These metrics can be exported through metric reporters to external systems such as Prometheus and visualized in tools like Grafana.

Exceptions: If your application fails to run, Flink will throw an exception with a stack trace, which can provide valuable information about the cause of the error. Reviewing these exceptions can be a key part of troubleshooting.

Savepoints/Checkpoints: These provide a mechanism to recover your application from failures. If your application isn't recovering correctly, it's worth investigating whether savepoints/checkpoints are being made correctly and can be successfully restored.

Backpressure: If a part of your data flow cannot process events as fast as they arrive, it can cause backpressure, which can slow down the entire application. The Flink dashboard provides a way to monitor this.

Network Metrics: Flink provides metrics on network usage, including buffer usage and backpressure indicators. These can be useful for diagnosing network-related issues.

Remember, monitoring and troubleshooting are iterative processes. If you notice performance degradation or failures, use these tools and techniques to investigate, identify the root cause, and apply a fix. Then monitor the system again to ensure that the problem has been resolved.
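To make the backpressure item above more concrete, here is a toy simulation in plain Java. It is not how Flink implements backpressure internally; it only models the general effect of a bounded buffer between a fast producer and a slow consumer. The class name, method name, and parameters are all assumptions for illustration.

```java
// Toy model of backpressure between a producer and a consumer; this is not Flink code.
class BackpressureSketch {

    // Simulates `ticks` time steps. In each tick the producer tries to put `produceRate`
    // records into a buffer that holds at most `capacity` records, then the consumer
    // removes up to `consumeRate` records.
    // Returns the number of ticks in which the producer was held back by a full buffer.
    static int blockedTicks(int ticks, int produceRate, int consumeRate, int capacity) {
        int buffered = 0;
        int blocked = 0;
        for (int t = 0; t < ticks; t++) {
            boolean wasBlocked = false;
            for (int i = 0; i < produceRate; i++) {
                if (buffered >= capacity) {
                    wasBlocked = true; // buffer full: the producer has to wait
                    break;
                }
                buffered++;
            }
            if (wasBlocked) {
                blocked++;
            }
            buffered -= Math.min(consumeRate, buffered);
        }
        return blocked;
    }

    public static void main(String[] args) {
        // Producer faster than consumer: the buffer fills up and the producer stalls.
        System.out.println("slow consumer: " + blockedTicks(10, 3, 2, 5) + " blocked ticks");
        // Consumer faster than producer: the buffer never fills.
        System.out.println("fast consumer: " + blockedTicks(10, 2, 3, 5) + " blocked ticks");
    }
}
```

In the first call the buffer absorbs the rate difference for three ticks and the producer is then held back in each of the remaining seven; in the second call it is never held back. This is the pattern to look for when investigating a slow job: the operator that shows backpressure is usually upstream of the one that is actually too slow.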

Conclusion

Apache Flink is a robust and versatile open-source stream processing framework that enables fast, reliable, and sophisticated processing of large-scale data streams. Starting with a simple environment setup, we've walked through the structure of a basic Flink application that ingests, processes, and outputs data. We've also touched on the foundational concepts of Flink, such as the DataStream API, windows, transformations, sinks, and sources, all of which serve as building blocks for more complex applications.