Lesson

4.11 Practical exercise

Scenario

You're working as a data engineer for NYC's Performance Analytics team. Your task is to build a daily data pipeline that ingests NYC 311 service request data. You need to create a data loader block that fetches yesterday's complete dataset of service requests to ensure you capture all daily activity for analysis.

Exercise Requirements

Create a data loader block that will:

Input: NYC Open Data API (Socrata)

Output: Raw DataFrame with all NYC 311 service request data

Date Range: Previous day from 12:00:01 AM to 11:59:59 PM

Goal: Fetch all available columns for complete daily batch processing

Step-by-Step Implementation

Step 1: Add Data Loader Block

In your Mage pipeline, click the "Blocks" button

Hover over "Data loader" and select "API"

Name the block: load_daily_nyc_311_data

Click "Save and add"

Step 2: Replace Template Code

Clear the template code and implement the following:
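A minimal sketch of such a loader might look like the following. It rests on several assumptions that are not stated in the lesson: the endpoint is the Socrata resource `erm2-nwe9` (the 311 Service Requests dataset on NYC Open Data), the date filter uses the `created_date` column, and the server clock is in the timezone you want "yesterday" to mean. The helper names `previous_day_window` and `build_params`, the `PAGE_SIZE` value, and the fallback decorator are illustrative only.

```python
from datetime import datetime, timedelta

# Assumption: Socrata endpoint of the NYC 311 Service Requests dataset.
API_URL = "https://data.cityofnewyork.us/resource/erm2-nwe9.json"
PAGE_SIZE = 50000  # hypothetical page size; tune to your needs

try:
    # Inside Mage, the decorator is provided by the framework.
    from mage_ai.data_preparation.decorators import data_loader
except ImportError:
    # Fallback so the sketch can also be run outside Mage.
    def data_loader(func):
        return func


def previous_day_window(now):
    """Return (start, end) timestamps covering the day before `now`."""
    yesterday = (now - timedelta(days=1)).date().isoformat()
    return f"{yesterday}T00:00:01", f"{yesterday}T23:59:59"


def build_params(start, end, offset):
    """SoQL parameters for one page. No $select, so all columns come back."""
    return {
        # Assumption: created_date is the column to filter on.
        "$where": f"created_date between '{start}' and '{end}'",
        "$order": ":id",  # stable ordering while paging
        "$limit": PAGE_SIZE,
        "$offset": offset,
    }


@data_loader
def load_daily_nyc_311_data(*args, **kwargs):
    # Imported here so the helpers above stay usable without these packages.
    import pandas as pd
    import requests

    start, end = previous_day_window(datetime.now())
    print(f"Fetching 311 requests created between {start} and {end}")

    rows = []
    offset = 0
    while True:
        response = requests.get(
            API_URL, params=build_params(start, end, offset), timeout=60
        )
        response.raise_for_status()
        page = response.json()
        rows.extend(page)
        if len(page) < PAGE_SIZE:  # last page reached
            break
        offset += PAGE_SIZE

    df = pd.DataFrame(rows)
    print(f"Loaded {len(df)} records with {len(df.columns)} columns")
    return df
```

The loader pages through the results because a single Socrata request returns only a limited number of rows, and a full day of 311 activity can exceed that limit. The DataFrame is returned without any cleaning, in line with the bronze-layer goal.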

Step 3: Run and Test

Click the Run button (▶️) to execute your data loader

Review the console output to confirm:

The date range being fetched

Number of records loaded

Number of columns returned

Check that the test passes successfully

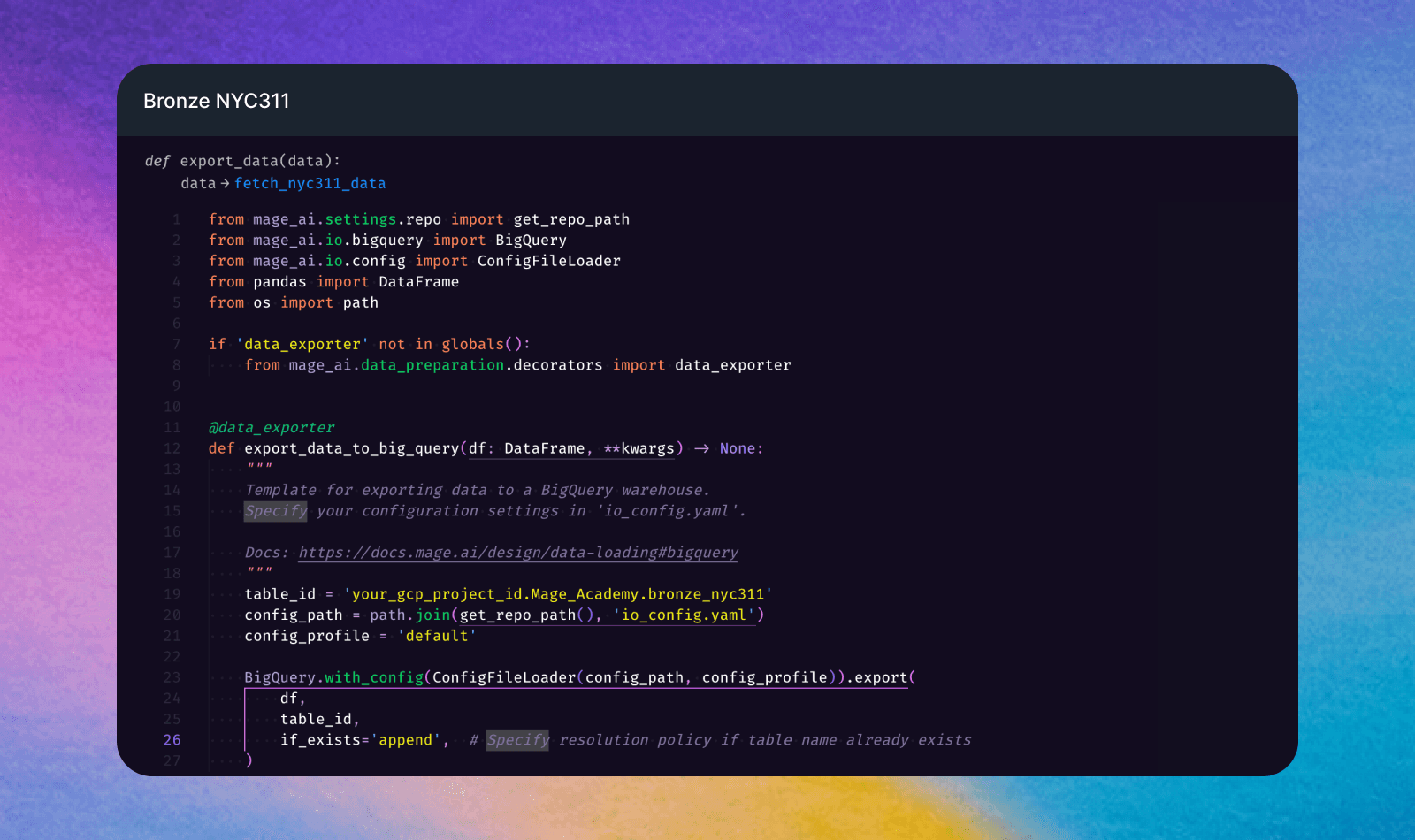

Step 4: Add data exporter block

In your Mage pipeline, click the "Blocks" button

Hover over "Data exporter", then "Data warehouses", and select "BigQuery"

Name the block: bronze_nyc311

Click "Save and add"

Change table_id to your BigQuery table ID and set the resolution policy to append

Expected Results

Console Output: Date range confirmation and record count

DataFrame: Raw NYC 311 data

Test Results: ✅ Successful validation of core columns

Daily Coverage: Complete dataset for the previous 24-hour period

Why This Approach?

This data loader design follows best practices for daily batch processing:

Consistent Daily Batches: Always processes complete days of data

No Data Cleaning: Keeps raw data intact for bronze layer

All Columns: Preserves complete dataset structure for downstream analysis

Reliable Date Logic: Works regardless of when the pipeline runs
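The date-logic point can be illustrated with a small sketch. The function name `previous_day_window` is hypothetical, and the example assumes the reference time is expressed in the timezone you want "yesterday" to mean. Two runs at very different times on the same day resolve to the same window:

```python
from datetime import datetime, timedelta


def previous_day_window(reference):
    """Return (start, end) timestamps for the day before `reference`."""
    day = (reference - timedelta(days=1)).date()
    return f"{day}T00:00:01", f"{day}T23:59:59"


# Two hypothetical run times on the same calendar day.
early_run = previous_day_window(datetime(2024, 1, 1, 0, 5))
late_run = previous_day_window(datetime(2024, 1, 1, 23, 55))

print(early_run == late_run)  # True
print(early_run)  # ('2023-12-31T00:00:01', '2023-12-31T23:59:59')
```

Because the window depends only on the calendar date of the run, a delayed or retried run on the same day fetches the same batch.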